Bill Matthews

Challenge Description

This challenge aims to enhance your skills in using Large Language Models (LLMs) for everyday testing activities. Through well-crafted prompts, you will explore how AI can assist in generating test scenarios, detecting ambiguous requirements, or evaluating the quality of test cases.

Challenge Steps

This challenge is broken down into three levels (beginner, intermediate, and advanced) to suit your skill set. Each level is designed to help you practise and improve your skill at crafting effective prompts that guide LLMs in supporting your testing activities.

Beginner Level

- Generate Basic Test Scenarios: Create a prompt that generates a test scenario for a common requirement, such as signing up for an online platform like the Ministry of Testing (MoT). Focus on crafting a prompt that makes the LLM create a story-like scenario.

- Format-Specific Test Scenarios: Build on the previous task by specifying the output format. This could be Behavior Driven Development (BDD) syntax or a CSV file tailored for upload into a test management tool. See how the format changes the usefulness and clarity of the scenario.

- Explain It to Me Like I’m Five: Pick a topic you’d like to know more about (a test technique, a type of testing, or a new technology) and ask the LLM to explain it to you. Then have a conversation with the LLM about the topic: ask follow-up questions, request concrete examples, and ask for additional explanations where something is unclear. Finally, summarise your understanding of the topic and ask the LLM to evaluate it.
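In the Format-Specific Test Scenarios task, only the formatting instructions in the prompt change. This minimal Python sketch keeps the requirement fixed and swaps only the output instructions, so you can compare story, BDD and CSV responses side by side. The function name, the persona line and the CSV column names are assumptions for illustration. Match the columns to whatever your own test management tool actually imports.

```python
# A minimal sketch of a reusable prompt template for the beginner tasks.
# The function name, format keys and CSV column names are hypothetical;
# adjust them to match your own test management tool's import format.

FORMAT_INSTRUCTIONS = {
    "story": "Write the scenario as a short story from the user's point of view.",
    "bdd": (
        "Write each scenario in Gherkin syntax using "
        "Feature, Scenario, Given, When and Then keywords."
    ),
    "csv": (
        "Return only CSV with the header row "
        "'ID,Title,Preconditions,Steps,Expected Result' and no other text."
    ),
}


def build_scenario_prompt(requirement: str, output_format: str = "story") -> str:
    """Combine a requirement with formatting instructions into one prompt."""
    if output_format not in FORMAT_INSTRUCTIONS:
        raise ValueError(f"Unknown format: {output_format!r}")
    return (
        "You are an experienced software tester.\n"  # persona line (assumption)
        f"Requirement: {requirement}\n"
        "Generate test scenarios covering the main flow and common failures.\n"
        f"{FORMAT_INSTRUCTIONS[output_format]}"
    )


if __name__ == "__main__":
    requirement = (
        "A new user can sign up to the Ministry of Testing website "
        "with an email address and password."
    )
    # Print the same requirement with each output format for comparison.
    for fmt in ("story", "bdd", "csv"):
        print(f"--- {fmt} ---")
        print(build_scenario_prompt(requirement, fmt))
```

Send each variant to the same LLM to see how the format alone changes the usefulness and clarity of the scenarios.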

Intermediate Level

- Test Scenario Generation for Specific Requirements: Craft a prompt that outlines a set of requirements for testing a feature, such as a password complexity validator. Your prompt should lead the LLM to generate detailed test scenarios for both expected and edge cases.

- Requirement Analysis: Provide a set of requirements and prompt the LLM to identify any that are incomplete or ambiguous. Then, ask the LLM to assess the overall quality of the requirements. This hones your skills in using AI to improve requirement specifications.

- How Do I Test This?: Describe an application and its key risks to an LLM, then ask the LLM to produce a test strategy or approach for the system. Follow this up by asking the LLM for further explanations, clarifications, or justifications of parts of the generated strategy. Finally, ask the LLM to summarise the test strategy or approach based on the conversation you just had.
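In the Requirement Analysis task, numbering each requirement lets the LLM point to specific items rather than paraphrasing them. Below is a minimal Python sketch of such a prompt builder. The REQ- ID scheme, the 1 to 5 rating scale and the sample password rules are illustrative assumptions and are not part of the challenge itself.

```python
# A minimal sketch of a requirement-analysis prompt. The requirement IDs,
# wording and rating scale are illustrative assumptions, not a standard.


def build_requirements_review_prompt(requirements: list[str]) -> str:
    """Number each requirement so the LLM can cite it by ID in its answer."""
    numbered = "\n".join(
        f"REQ-{i}: {text}" for i, text in enumerate(requirements, start=1)
    )
    return (
        "Act as a senior tester reviewing requirements before development.\n"
        "For each requirement below, state whether it is complete and "
        "unambiguous. If not, quote the unclear wording, explain the problem "
        "and suggest a question to ask the product owner.\n"
        "Finish with an overall quality rating from 1 (poor) to 5 (excellent) "
        "and a one-paragraph justification.\n\n"
        f"{numbered}"
    )


if __name__ == "__main__":
    # Hypothetical password complexity requirements, deliberately vague in places.
    password_rules = [
        "Passwords must be at least 8 characters long.",
        "Passwords must contain a special character.",
        "Passwords should be strong enough.",
    ]
    print(build_requirements_review_prompt(password_rules))
```

A deliberately vague rule such as "strong enough" is a useful check that the LLM actually flags ambiguity instead of quietly inventing a definition.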

Advanced Level

- Comparative Feature Analysis: Give the LLM two sets of requirements representing different versions of a feature. Your task is to craft a prompt that asks the LLM to summarise the changes and highlight the areas that need testing. This enhances your skill in leveraging AI to manage feature evolution effectively.

- Test Evaluation: Present a set of test cases and feature requirements to the LLM. Your prompt should guide the LLM in evaluating the completeness and quality of these tests, providing insights into how well the tests cover the requirements.

- LLMs Evaluating LLMs: Use an LLM to generate a set of scenarios for a feature. Then, either with the same LLM or a different one, craft a prompt to ask the LLM to assess the quality, completeness, and accuracy of these scenarios based on the feature requirements.
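In the Comparative Feature Analysis task, it can help to compute the differences yourself rather than relying on the LLM to spot them. This Python sketch uses the standard library's `difflib.unified_diff` to embed a line-by-line diff of two requirement versions in the prompt. The function name, prompt wording and sample requirements are assumptions for illustration.

```python
import difflib

# A minimal sketch for the comparative feature analysis task: compute a
# line-by-line diff of two requirement versions with difflib and embed it
# in the prompt, so the LLM sees exactly what changed.
# The function name and prompt wording are illustrative assumptions.


def build_change_analysis_prompt(old_reqs: list[str], new_reqs: list[str]) -> str:
    """Return a prompt containing a unified diff of two requirement versions."""
    diff = "\n".join(
        difflib.unified_diff(
            old_reqs, new_reqs, fromfile="version 1", tofile="version 2", lineterm=""
        )
    )
    return (
        "You are a test lead planning regression testing.\n"
        "Below is a unified diff between two versions of a feature's requirements "
        "('-' lines were removed, '+' lines were added).\n"
        "1. Summarise each change in plain language.\n"
        "2. List the areas that need new or updated tests, and explain why.\n"
        "3. Note any existing behaviour that could be affected indirectly.\n\n"
        f"{diff}"
    )


if __name__ == "__main__":
    # Hypothetical password policy requirements, before and after a change.
    v1 = [
        "Passwords must be at least 8 characters long.",
        "Passwords must contain a number.",
    ]
    v2 = [
        "Passwords must be at least 12 characters long.",
        "Passwords must contain a number.",
        "Passwords must not match the user's email address.",
    ]
    print(build_change_analysis_prompt(v1, v2))
```

The diff keeps the model focused on what changed. For long specifications, you may still want to include the full new version so the LLM has context for requirements outside the diff's context lines.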

Tips:

- Experiment with different ways to frame your prompts to see what gives you the most useful responses.

- Pay attention to how the LLM’s responses vary based on the specificity and clarity of your prompts.

- Investigate how using personas changes the quality of the LLM’s responses.

- Reflect on the strategies that led to the most successful interactions with the AI.

Challenge Solutions

Share your solutions and view the solutions other participants have shared.

What you’ll learn

- Apply Prompt Engineering techniques to communicate effectively with AI models for test activities

Resources

- Prompt Engineering Guide - DAIR.AI

- ChatGPT Prompt Engineering for Developers - DeepLearning.AI

- Prompt Engineering Guide - OpenAI

- AI-Assisted Testing - Manning

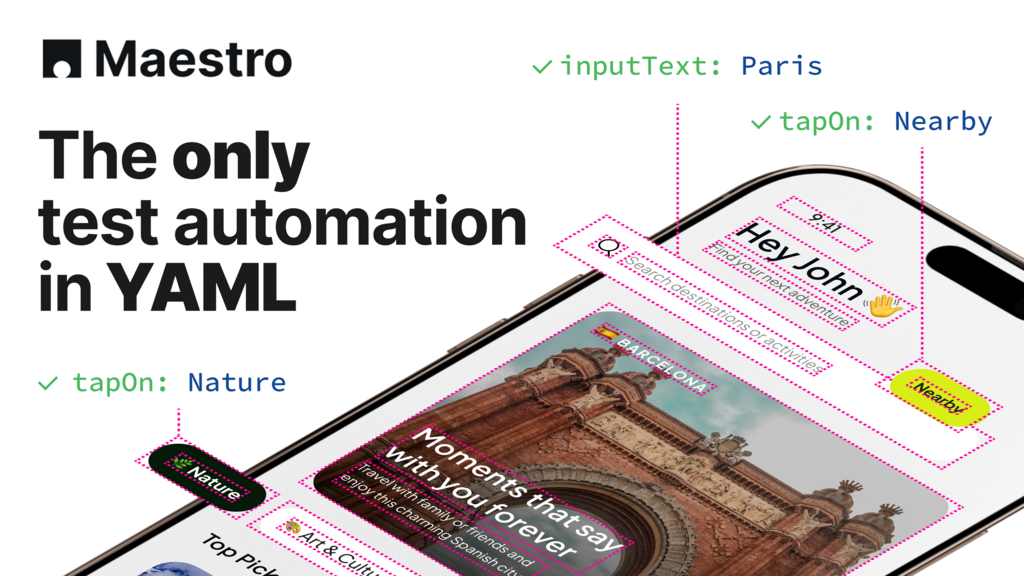

Create E2E tests visually. Get clear, readable YAML you can actually maintain.

Explore MoT

RiskStorming; Artificial Intelligence is a strategy tool that helps your team to not only identify high value risks, but also set up a plan on how to deal

Advanced prompting skills to turn AI into your trusted testing companion.

Into the MoTaverse is a podcast by Ministry of Testing, hosted by Rosie Sherry, exploring the people, insights, and systems shaping quality in modern software teams.