Rahul Parwal

Test Specialist

I am Open to Write, Teach, Speak, Mentor, CV Reviews

Rahul Parwal is a Test Specialist with expertise in testing, automation, and AI in testing. He is an award-winning tester and international speaker.

Want to know more? Check out testingtitbits.com

Achievements

Certificates

Awarded for:

Achieving 5 or more Community Star badges

Activity

awarded Barry Ehigiator for:

Loved the fab video by BBC featuring you. Superb production. And of course, it also reflects your superb testing efforts. Barry = High Quality Level (for me).

earned:

This is Quality | BBC

earned:

12.4.0 of MoT Software Testing Essentials Certificate

earned:

12.3.0 of MoT Software Testing Essentials Certificate

earned:

And the Ambassadors of the MoTaverse for 2026 are... 🥁

Contributions

I'm super excited to announce our 2026 Ambassadors!!

Make sure to follow them on the MoTaverse.

And the 2026 Ambassadors of the MoTaverse are...........

- Ady Stokes

- Ben Dowen

- Cassandr...

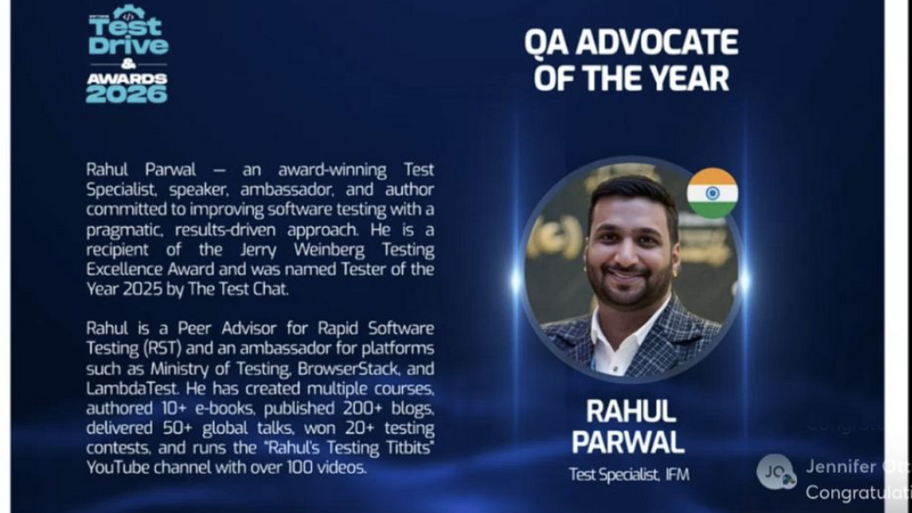

Humbled, grateful, and a little amazed.

Named 𝐐𝐀 𝐀𝐝𝐯𝐨𝐜𝐚𝐭𝐞 𝐨𝐟 𝐭𝐡𝐞 𝐘𝐞𝐚𝐫 at 𝘚𝘰𝘧𝘵𝘸𝘢𝘳𝘦 𝘛𝘦𝘴𝘵 𝘋𝘳𝘪𝘷𝘦 𝘈𝘸𝘢𝘳𝘥𝘴 2026 by Scandium Systems Inc.

Gratitude to my LinkedIn and #MoTaverse network for uplifting...

We wrapped up our first year of Ambassadoring, reflected on it, and explored some ideas on how we can improve it.

As time came to the end, I was saying thank you and wasn't sure how to say goodbye,...

Happy New Year, 2026

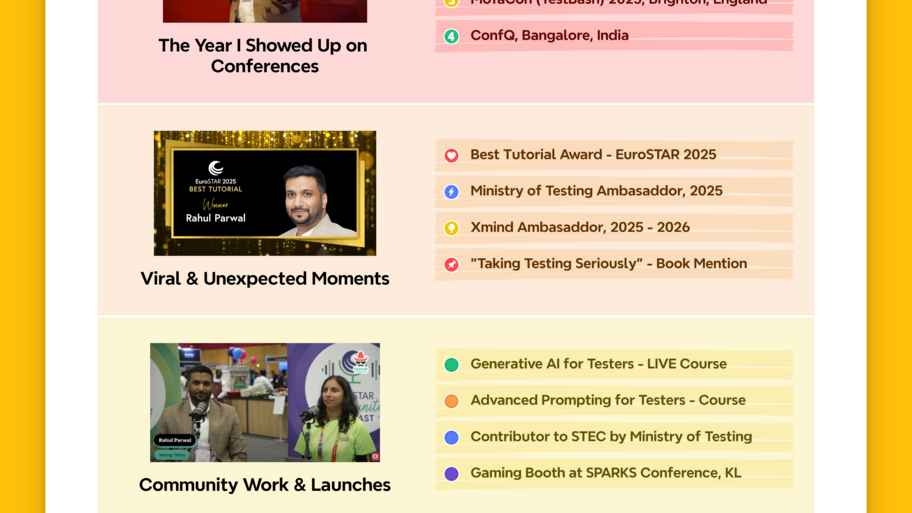

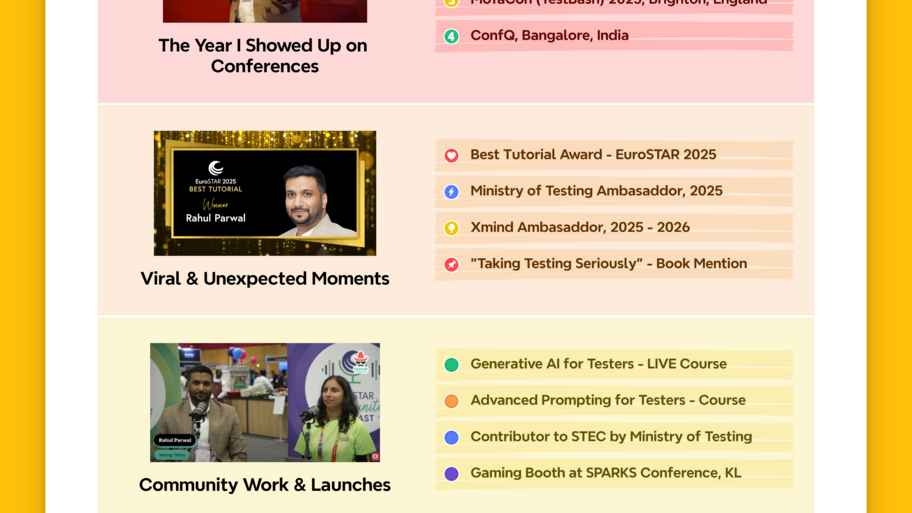

2025 didn’t happen to me. I showed up and built it.

Spoke across continents. Won Best Tutorial at EuroSTAR 2025. Got a book mention. Became an ambassador. Launched courses. Played where it matte...
