By Mirza Sisic

People who are new to software testing, and sometimes experienced testers as well, can have very different ideas about exploratory testing. These ideas range from “clicking around the app a bit” or “trying to break it” to more structured techniques such as charter-based testing. The former views can lead people to underestimate the importance of exploratory testing, undervalue its role, and confuse it with ad hoc testing and error guessing.

By sharing observations from personal experience along with common best practices, I hope to dispel some misconceptions, encourage new testers to try a more structured approach to exploratory testing, and help them explain the benefits of such an approach. Using test charters, setting a clear mission for each session (such as choosing a part of the system to focus on) and time-boxing the session can make exploratory testing far more productive, enabling you to learn valuable information about the system under test and maybe even discover some hidden defects.

Confusing exploratory testing with ad hoc testing

In general, we use exploratory testing to gain insight into the system and to learn more about the application we are exploring. Exploratory testing sessions are most fruitful when we narrow our focus to a particular feature or part of the system, and we usually time-box them to under an hour: if a session runs too long, our concentration starts to drop, we become less efficient and we may miss things. Ad hoc testing, on the other hand, has very little structure. We might click around the app, trying whatever comes to mind at the moment in order to break some functionality and find bugs.

Ad hoc testing is generally performed later in the development lifecycle, whereas exploratory testing can start much earlier. Even if the UI is not yet developed, we can explore the API, or even the specification or requirements if that is all we have at the moment, and use techniques such as mind mapping to create visual learning notes. Ad hoc testing is usually done in settings such as bug bounties, bug bashes and hackathons.

Spending too much or too little time on planning your exploratory testing sessions

We can view exploratory testing as a sweet spot between old-school scripted testing (writing test plans and test cases with detailed step-by-step instructions) and ad hoc testing (the opposite end of the spectrum, unplanned and unstructured). Done properly, exploratory testing strikes a balance between those two approaches, making it flexible and yet structured at the same time.

Creating a testing charter (from the Latin word charta, meaning "paper, card or map") is usually not as time-consuming as creating a detailed test plan, but we do need to set aside at least a few minutes to get a mental picture of the segment of the system we intend to focus on, so we can base our charter on that.

Understand the difference between exploratory testing and test-case based testing

The most obvious difference between exploratory testing and scripted testing (writing test cases) is that in scripted testing we have an expected outcome in mind, usually referred to as the expected result (something we assert on). In exploratory testing the outcome is not always as clear: we may only have a rough idea of what we could learn, or want to learn, by exploring a part of our application.

Scripted testing is used more in traditional environments, where we have a (relatively) stable and well-documented product, so writing test cases that either pass or fail when executed can add value. Exploratory testing, on the other hand, works well when we do not have much knowledge of the system and want to learn more, or when the system is documented in another way, for example through BDD specs written in readable Gherkin syntax. Exploratory testing can be used effectively to supplement BDD.

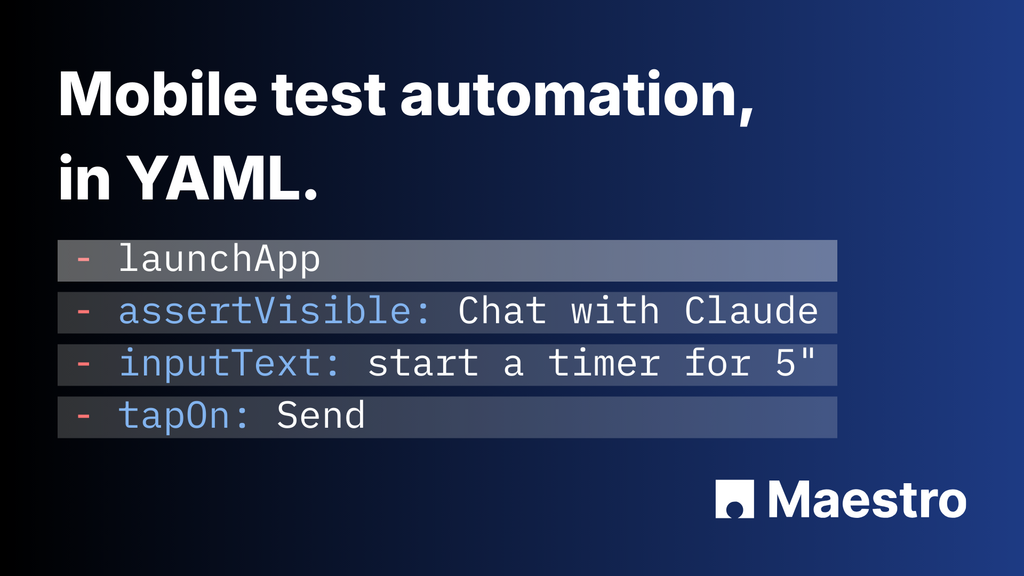

Test automation can be used as a way of documenting the system, but this approach only works well if the automation is well maintained and properly architected. The idea of using test automation as a form of documentation is well explained by Maaret Pyhäjärvi in this article.

Creating minimalist exploratory testing charters

Elisabeth Hendrickson, the author of the excellent book Explore It!: Reduce Risk and Increase Confidence with Exploratory Testing, suggests a very concise format as the basis for our testing charters. You can, of course, extend it, but it works great even as a three-liner. The benefit is that you don’t burden yourself with preparing long testing documents, yet you still focus your effort and gain an element of traceability.

Here is an example of how it would look:

- Explore <registration functionality>
- with <unconventional email formats>
- to discover <edge cases not covered by form validation>
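The three-line template can also be captured as a small data structure, which makes it easy to keep charters next to session notes. The following is a minimal Python sketch. The `Charter` class, its field names and the 45-minute default time box are assumptions made for illustration; they are not part of Hendrickson's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Charter:
    """A three-line exploratory testing charter (hypothetical helper)."""

    target: str       # what to explore
    resources: str    # what to explore it with
    information: str  # what we hope to discover
    time_box_minutes: int = 45  # assumed default, kept under an hour

    def render(self) -> str:
        """Return the charter in the Explore / with / to discover format."""
        return (
            f"Explore {self.target}\n"
            f"with {self.resources}\n"
            f"to discover {self.information}"
        )


charter = Charter(
    target="registration functionality",
    resources="unconventional email formats",
    information="edge cases not covered by form validation",
)
print(charter.render())
```

Keeping the time box on the charter itself is one possible design choice; it could just as well live in whatever tool tracks the session.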

Exploratory testing notes as learning material

Exploratory testing sessions should not be run just for the sake of exploring; we should benefit from them by learning more about our system under test. The right tooling can help us (and others) learn more from our sessions. Creating mind maps to visualize lessons learned provides a high-level overview of the different parts of the system, and we can incorporate each session into a larger visual representation of the whole system, treating it as a piece of a bigger puzzle. Exploratory testing session management apps also help: many of them let us export our notes in different formats, along with our comments and screenshots, which makes them useful for reporting to stakeholders and demonstrating the value of exploratory testing. One example is the Xray Exploratory Testing app.
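To make the idea of exportable session notes concrete, here is a minimal Python sketch of timestamped notes rendered as a short text report. It does not reflect how any particular session management app works; the `SessionNotes` class, the note labels and the sample entries are all invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class SessionNotes:
    """Timestamped notes for one exploratory session (hypothetical helper)."""

    charter: str
    entries: list = field(default_factory=list)

    def add(self, kind: str, text: str, when: datetime) -> None:
        """Record a note; kind is a free-form label such as 'bug' or 'question'."""
        self.entries.append((when, kind, text))

    def to_report(self) -> str:
        """Render the notes as a short report, oldest entry first."""
        lines = [f"Charter: {self.charter}", ""]
        for when, kind, text in sorted(self.entries):
            lines.append(f"- {when:%H:%M} [{kind}] {text}")
        return "\n".join(lines)


# Sample entries are made up purely to show the output shape.
notes = SessionNotes(charter="Explore registration with unconventional email formats")
notes.add("bug", "Trailing dot in the domain is accepted", datetime(2024, 1, 1, 10, 20))
notes.add("observation", "Plus-addressed emails are accepted", datetime(2024, 1, 1, 10, 5))
report = notes.to_report()
print(report)
```

A plain-text report like this can be pasted into a ticket or a wiki page, which is often enough to show stakeholders what a session covered.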

Using Heuristics as guidelines

Heuristics (guiding principles, or “rules of thumb”) can give more structure to our exploratory testing approach and save us some time in the long run. Here are a few well-known heuristics that have proven quite useful to me in the past:

- SFDPO (James Bach):
  - Structure (what the software is): What are its components?
  - Function (what the software does): What are its functions from both the business and user perspective?
  - Data (what the software processes): What input does it accept and what is the expected output? Is input sequence sensitive? Are there multiple modes-of-being?
  - Platform (what the software depends on): What operating systems does it run on? Does the environment specify a configuration to work? Does it depend on third-party components?
  - Operations (how the software will be used): Who will use it? Where and how will they use it? What will they use it for?

- CRUD, quite useful in exploring APIs:
  - Create: POST request
  - Read: GET request
  - Update: PUT request
  - Delete: DELETE request

- HICCUPPS (Michael Bolton):
  - History. We expect the present version of the system to be consistent with past versions of it.
  - Image. We expect the system to be consistent with an image that the organization wants to project, with its brand, or with its reputation.
  - Comparable Products. We expect the system to be consistent with systems that are in some way comparable. This includes other products in the same product line; competitive products, services, or systems; or products that are not in the same category but which process the same data; or alternative processes or algorithms.
  - Claims. We expect the system to be consistent with things important people say about it, whether in writing (reference specifications, design documents, manuals, whiteboard sketches…) or in conversation (meetings, public announcements, lunchroom conversations…).
  - Users’ Desires. We believe that the system should be consistent with ideas about what reasonable users might want. (Update, 2014-12-05: We used to call this “user expectations”, but those expectations are typically based on the other oracles listed here, or on quality criteria that are rooted in desires; so, “user desires” it is.)
  - Product. We expect each element of the system (or product) to be consistent with comparable elements in the same system.
  - Purpose. We expect the system to be consistent with the explicit and implicit uses to which people might put it.
  - Statutes. We expect a system to be consistent with laws or regulations that are relevant to the product or its use.

- FCC CUTS VIDS (Michael Kelly's touring heuristic):
  - Feature tour
  - Complexity tour
  - Claims tour
  - Configuration tour
  - User tour
  - Testability tour
  - Scenario tour
  - Variability tour
  - Interoperability tour
  - Data tour
  - Structure tour

- RCRCRC (Karen N. Johnson's regression testing heuristic):
  - Recent: new features, new areas of code are more vulnerable
  - Core: essential functions must continue to work
  - Risk: some areas of an application pose more risk
  - Configuration sensitive: code that’s dependent on environment settings can be vulnerable
  - Repaired: bug fixes can introduce new issues
  - Chronic: some areas in an application may be perpetually sensitive to breaking
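As an illustration of the CRUD heuristic from the list above, the following Python sketch turns the operation-to-method mapping into a checklist of requests to try against one resource. It only builds the checklist and sends nothing over the network. The `crud_checklist` function and the `api.example.test` endpoint are hypothetical, and a real API may use PATCH or other methods for updates.

```python
# Conventional mapping of CRUD operations to HTTP methods, as listed above.
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",
    "delete": "DELETE",
}


def crud_checklist(collection_url: str, item_id: str) -> list:
    """Return (operation, method, url) tuples to explore for one resource.

    Create targets the collection; the other operations target a single item.
    """
    item_url = f"{collection_url.rstrip('/')}/{item_id}"
    checklist = []
    for operation, method in CRUD_TO_HTTP.items():
        url = collection_url if operation == "create" else item_url
        checklist.append((operation, method, url))
    return checklist


# Hypothetical endpoint, used only for illustration.
plan = crud_checklist("https://api.example.test/users", "42")
for operation, method, url in plan:
    print(f"{operation:<6} {method:<6} {url}")
```

Walking through the checklist in different orders (for example, reading an item after deleting it) is one way to turn the heuristic into session ideas.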

Conclusion

As pointed out earlier in the article, exploratory testing sits between more rigid scripted testing and free-form ad hoc testing. This balance makes it extremely useful in many situations: being able to leverage both structure and flexibility can empower us to find things we would not find as easily using other testing approaches.

The best way to improve our exploratory testing is through continuous practice. As we gain more experience, our exploratory testing sessions will become more insightful, and we should always try to apply the new things we learn in practice. So try applying that testing heuristic you read about recently, or try using a new tool for your next exploratory testing session!

Bonus

If you’re looking to learn more about exploratory testing (ET), there is a true treasure trove of useful discussions over at the MoT Club: https://club.ministryoftesting.com/tag/exploratory-testing

Also, you can join the Exploratory Testing Slack channel, which is run by Maaret Pyhäjärvi and Ru Cindrea:

https://docs.google.com/forms/d/e/1FAIpQLSfQUlLU2agTSp0eMHj7nWMdi8eMD6-iNvdKZvIjkXP_6qAexA/viewform

About the author

Mirza has always been a technology geek, helping friends and family with computer-related issues. He started in tech support in 2014, moved to software testing in 2017, and has been there since. Mirza also worked as a freelance web developer for a while. When he’s not sharing memes online, Mirza is usually learning new things, writing posts for his blog, and being an active member of the testing community.