A tester’s role in evaluating and observing AI systems

Senior Quality Architect

Talk Description

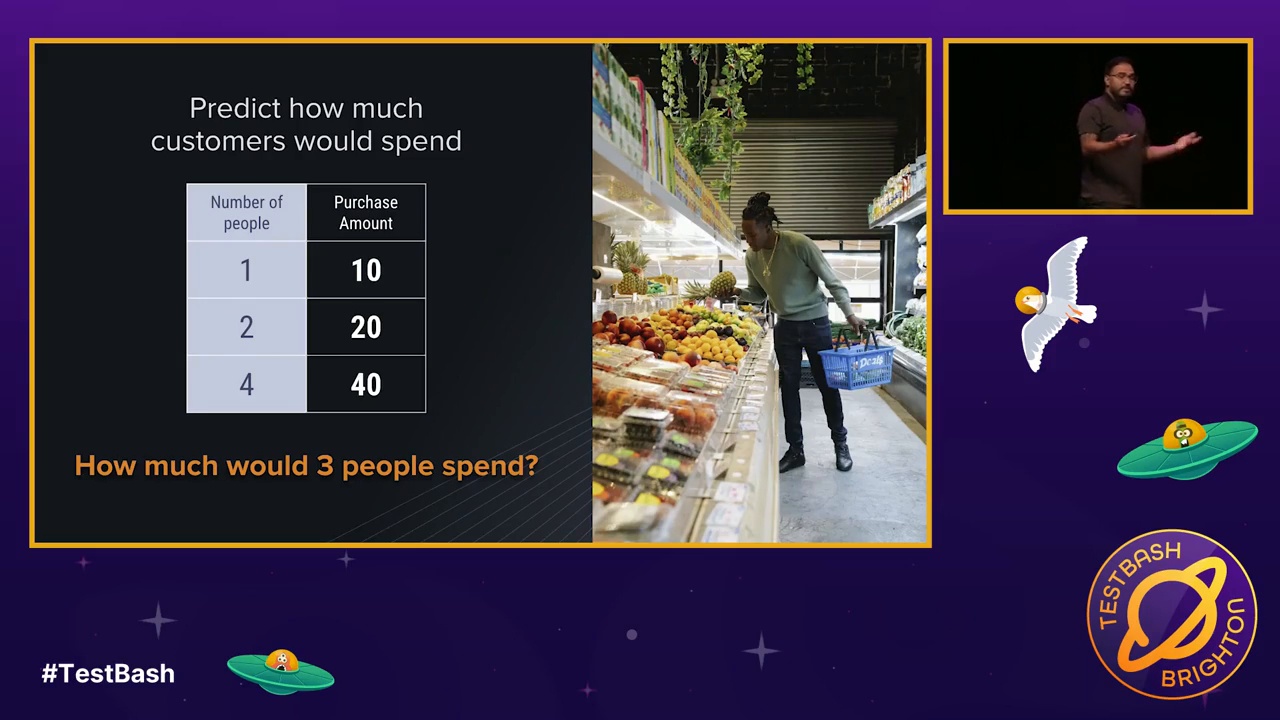

As more teams build products powered by AI models, testers have a growing opportunity to shape how these systems are evaluated and understood. In this talk, Carlos Kidman shows how testers can apply familiar testing skills to the world of AI, using LangSmith to create manual and automated evaluations, define quality attributes, and observe how AI behaves in development and production.

Through live examples, Carlos demonstrates how to design meaningful tests for non-deterministic systems, measure performance and accuracy, and add value to AI projects from design to deployment. You’ll see that many of the skills testers already have, such as analysis, evaluation, experimentation, and observation, translate directly to testing AI.

By the end of this session, you'll be able to:

- Describe a tester’s role in evaluating and observing AI systems.

- Explain how to design and run manual and automated tests for AI models using LangSmith.

- Identify useful metrics and evaluation techniques for assessing AI quality.

- Apply observability tools to monitor AI performance and behaviour in production.
