Most conversations about AI in testing are still stuck on the same question:

“Will AI replace testers?”

Meanwhile, the real risks are hiding in plain sight.

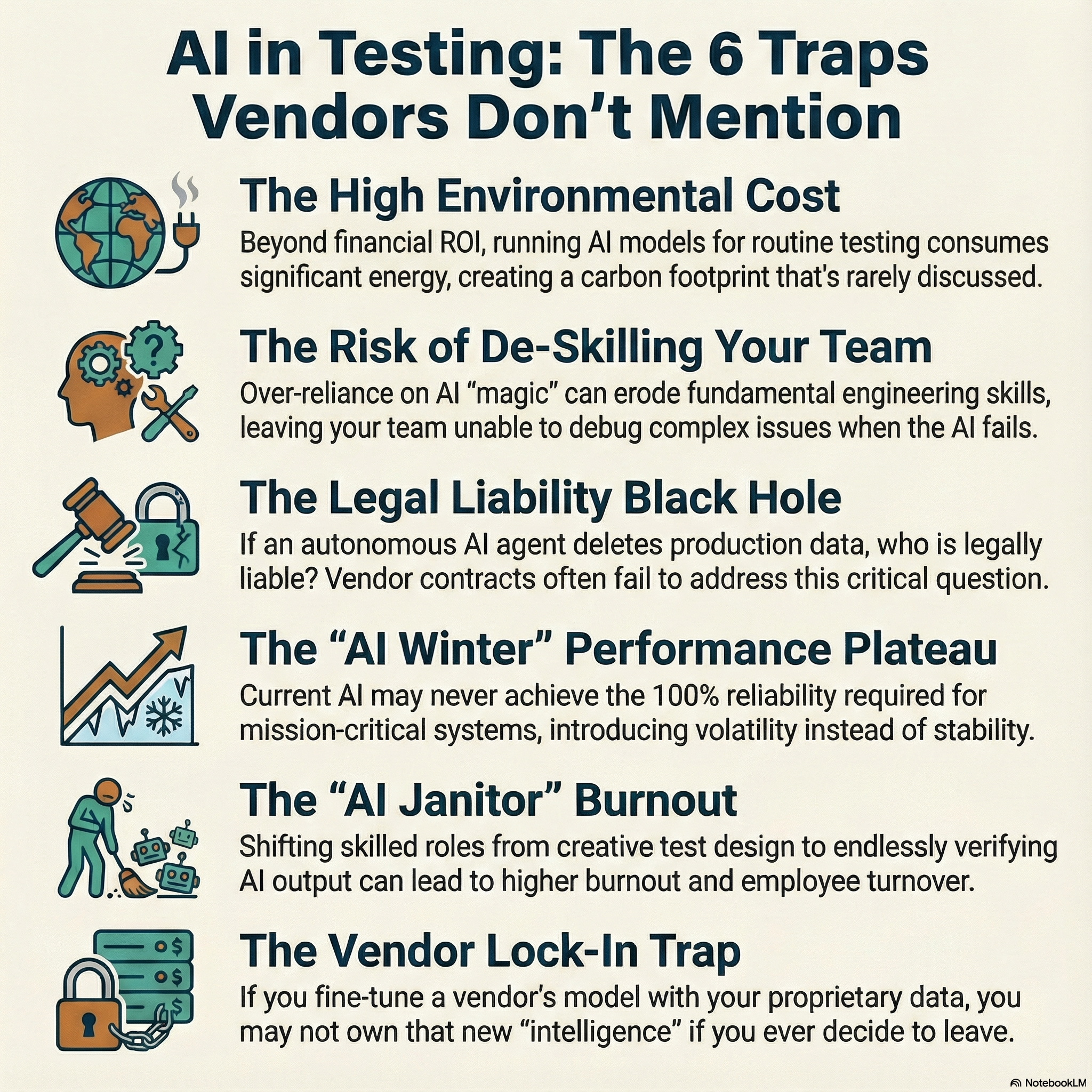

Vendors rarely talk about the deeper traps teams fall into when they adopt AI without a strategy: the slow erosion of engineering skill, the legal gaps nobody wants to own, or the burnout that comes from turning testers into human janitors for AI output.

These issues are far more important than whether a model can generate a test case.

I put together an infographic with six traps I see teams run into when AI is introduced without guardrails or clear quality outcomes. None of these are reasons to avoid AI, but they are reasons to adopt it thoughtfully.

If you want AI to actually improve quality, rather than just create new types of waste and risk, you need to understand the trade-offs before you press go.

Curious to explore this further? I’ll be posting more practical guidance on AI in testing over the next few weeks, including how to evaluate AI tools without falling for vendor theatre.