Ujjwal Kumar Singh

SDET @ Skeps

He/Him

I am Open to Speak, Write, Podcasting, Meet at MoTaCon 2026, Teach

Hi, I’m Ujjwal, a software tester and quality advocate. I explore how quality works beyond tools and into systems, decisions, and trade-offs.

Substack: https://substack.com/@beinghumantester

Achievements

Certificates

Awarded for:

Achieving 5 or more Community Star badges

Activity

earned:

First Public Talk of 2026

earned:

Why Your Transition to Quality Engineering May Fail

Contributions

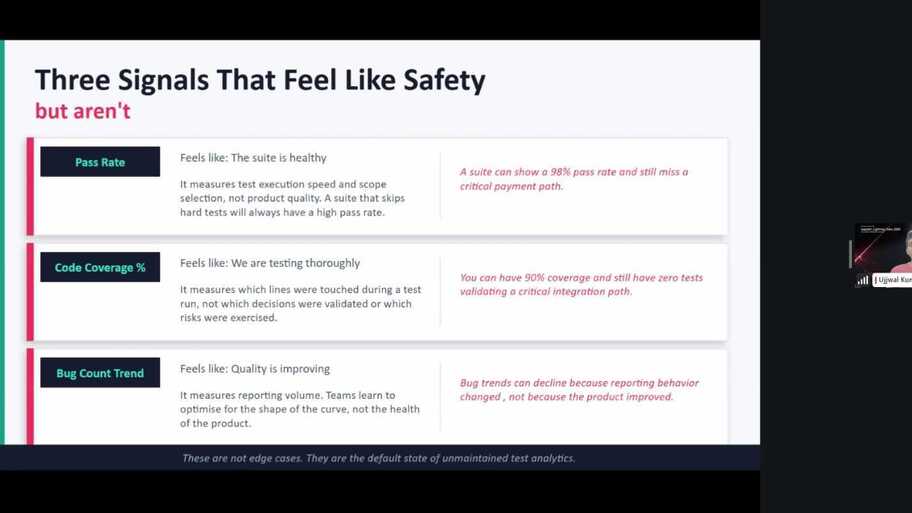

Shared my thoughts on how test analytics without decision-making is just reporting.

Test pollution happens when one test leaves behind data or a system state that affects another test. The failure appears unrelated, but the root cause is shared context leaking between tests. This usually shows up when shared data is not cleaned up, system state is reused unintentionally, or resources remain locked or modified after a test finishes. As test suites grow, these hidden dependencies create failures that are hard to reproduce and even harder to trust. For example, a user registration test creates abc@xyz.com but doesn’t delete it. Later, another test tries to register the same email and fails with “email already exists.” The second test looks broken, but the real issue is that the first test polluted the system. The failure depends on execution order, which makes it flaky and misleading. The core problem is not the assertion, it’s isolation. Reliable tests must assume they run alone. That often means rolling back test transactions, generating unique test data, or running tests in disposable environments such as containers that are destroyed after each run. One way teams uncover test pollution is by running tests in random order or executing individual tests in isolation. If results change based on order, hidden dependencies are already present.
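The registration example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FakeUserStore`, `unique_email`, and the two check functions are hypothetical names, and the in-memory store stands in for whatever real system a test suite would register users against.

```python
import uuid


class FakeUserStore:
    """Hypothetical in-memory stand-in for the system under test."""

    def __init__(self):
        self.emails = set()

    def register(self, email):
        if email in self.emails:
            raise ValueError("email already exists")
        self.emails.add(email)

    def delete(self, email):
        self.emails.discard(email)


# One store shared by every check, like a shared test database.
shared_store = FakeUserStore()


def unique_email():
    # A fresh address per call, so checks cannot collide on test data.
    return f"user-{uuid.uuid4().hex}@example.test"


def polluting_check():
    # Registers a fixed address and never removes it.
    shared_store.register("abc@xyz.com")


def isolated_check():
    # Uses unique data and always cleans up, even if the assertion fails.
    email = unique_email()
    shared_store.register(email)
    try:
        assert email in shared_store.emails
    finally:
        shared_store.delete(email)
```

Calling `polluting_check()` a second time raises `ValueError("email already exists")`, while `isolated_check()` can run any number of times in any order. Either half of the fix (unique data or cleanup) would avoid this particular collision; the sketch uses both.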

Coverage illusion is the false sense of confidence that comes from having high test coverage numbers without actually testing what matters. It happens when metrics suggest that a system is well tested, but important behaviours, risks, or failure scenarios remain unexamined. This illusion often appears when tests focus on executing lines of code rather than validating outcomes. For example, a test may pass through a piece of logic without checking whether the result is correct, or it may avoid meaningful edge cases while still increasing coverage percentages. Coverage illusion is risky because it shifts attention from understanding behaviour to chasing numbers. Teams may believe the system is safe to release because coverage looks good, even though critical paths, integrations, or assumptions have not been tested properly. Avoiding coverage illusion means treating coverage as a signal, not a goal. Good testing looks beyond what was executed and asks what was actually verified, what could go wrong, and what evidence exists that the system behaves as intended under real conditions.
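A small sketch can make the gap between "executed" and "verified" concrete. The `apply_discount` function below is a hypothetical example with a deliberate bug; both checks execute every line of it, but only one of them looks at the result.

```python
def apply_discount(price, percent):
    # Deliberately buggy for illustration: treats the percentage as an
    # absolute amount instead of a fraction of the price.
    return price - percent


def executes_only_check():
    # Runs every line of apply_discount, so line coverage is complete,
    # but nothing about the result is verified.
    apply_discount(200, 10)
    return True


def verifies_outcome_check():
    # Same coverage, but compares against the expected outcome:
    # 10% off 200 should be 180.
    return apply_discount(200, 10) == 180
```

Here `executes_only_check()` returns `True` and `verifies_outcome_check()` returns `False`, even though a coverage tool would report the same lines as covered in both cases.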

State leakage happens when data, configuration, or system state from one action, test, or user session unintentionally affects another. Instead of starting from a clean and predictable state, behaviour is influenced by something left behind earlier. This often shows up in tests that pass when run alone but fail when run together. For example, a test might rely on data created by a previous test, a user session might remain active longer than expected, or a cached value might change the outcome of a later action. The system appears inconsistent, even though the logic itself has not changed. State leakage is risky because it hides real problems. Tests may pass for the wrong reasons, failures may be hard to reproduce, and bugs may only appear in certain orders or environments. This can lead to flaky tests and false confidence in system stability. Reducing state leakage involves being clear about setup and cleanup, isolating tests where possible, and validating assumptions about system state. When state is controlled and predictable, behaviour becomes easier to reason about and failures become easier to trust.
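The cached-value case might look roughly like the sketch below. All names (`_settings_cache`, `get_currency`, `run_in_order`, and the two checks) are assumptions made for illustration; the point is only that each check passes alone, and that resetting state between checks removes the dependence on what ran earlier.

```python
# Module-level cache: state that outlives any single check.
_settings_cache = {}


def get_currency(default="USD"):
    # Returns whatever an earlier call cached, ignoring the new default.
    return _settings_cache.setdefault("currency", default)


def reset_state():
    _settings_cache.clear()


def check_euro():
    return get_currency(default="EUR") == "EUR"


def check_default():
    return get_currency() == "USD"


def run_in_order(checks, isolate):
    # Start from a clean cache, then optionally reset before every check.
    reset_state()
    results = []
    for check in checks:
        if isolate:
            reset_state()
        results.append(check())
    return results


print(run_in_order([check_euro, check_default], isolate=False))  # [True, False]
print(run_in_order([check_euro, check_default], isolate=True))   # [True, True]
```

Without isolation, whichever check runs second sees the first one's cached value and fails. With a reset before each check, both pass regardless of order.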

A silent failure is a situation where a system fails to behave as expected but does not surface any visible error, alert, or warning. From the outside, everything appears to be working, even though something important has gone wrong underneath. This can happen when errors are swallowed, logs are missing, validations are skipped, or failures are handled in a way that hides their impact. For example, a background job may stop processing data, an event may never be triggered, or a user action may be ignored, without anyone being notified. Silent failures are particularly risky because they create false confidence. Tests may pass, dashboards may look healthy, and users may not notice a problem immediately. The issue is often discovered much later, sometimes only after data is lost, trust is damaged, or downstream systems break. Identifying silent failures requires testers to look beyond visible outcomes. This includes checking side effects, data changes, system state, and assumptions about what should have happened. Good observability, clear expectations, and thoughtful test design help reduce the chance of silent failures going unnoticed.
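As a rough illustration of a swallowed error, the sketch below processes a batch of orders in two ways. The job functions, `SAMPLE_ORDERS`, and `missing_order_ids` are hypothetical names invented for this example. The first job completes without raising anything, so only a check on the side effect (the ledger contents) reveals that a record was dropped.

```python
SAMPLE_ORDERS = [
    {"id": 1, "amount": "10.5"},
    {"id": 2, "amount": "n/a"},  # malformed amount
    {"id": 3, "amount": "4"},
]


def process_orders_silently(orders, ledger):
    # Swallows every error: a bad record is skipped with no log or alert.
    for order in orders:
        try:
            ledger[order["id"]] = float(order["amount"])
        except Exception:
            pass


def process_orders_reporting(orders, ledger):
    # Same work, but failures are collected and returned to the caller.
    failures = []
    for order in orders:
        try:
            ledger[order["id"]] = float(order["amount"])
        except (KeyError, TypeError, ValueError) as exc:
            failures.append((order.get("id"), repr(exc)))
    return failures


def missing_order_ids(orders, ledger):
    # Side-effect check: which orders never reached the ledger?
    return [order["id"] for order in orders if order["id"] not in ledger]
```

A test that only asserts "the job did not raise" passes for both versions. Asserting that `missing_order_ids(SAMPLE_ORDERS, ledger)` is empty fails for the silent job, because order 2 never reaches the ledger.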

Defect seeding is a testing technique where known bugs are deliberately added to the software to evaluate how effective the testing really is. Think of it as testing the test process itself. If testers or automated tests can catch these planted defects, it’s a good sign that the testing approach is working. If many of them go unnoticed, that’s a signal that coverage is weak or certain risk areas are being missed. This technique is also used to estimate how many real defects might still be hidden. For example, if testers find 15 out of 20 seeded defects, we might assume a similar detection rate for real bugs. However, this only works if the seeded defects behave like real ones, which isn’t always the case. Because it takes effort and careful planning, defect seeding is mostly used in research or controlled environments rather than day-to-day projects.
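The estimate can be worked through with simple arithmetic. The sketch below assumes the seeded detection rate carries over to real defects, which is exactly the assumption that may not hold in practice. The function name is hypothetical, and the figure of 30 real defects found is an invented number used only to complete the example.

```python
def estimate_remaining_defects(seeded_total, seeded_found, real_found):
    """Estimate hidden real defects from the seeded detection rate."""
    if not 0 < seeded_found <= seeded_total:
        raise ValueError("seeded_found must be between 1 and seeded_total")
    detection_rate = seeded_found / seeded_total
    # If testing catches this share of real defects too, the real defects
    # found so far are that share of the estimated total.
    estimated_total = real_found / detection_rate
    return detection_rate, estimated_total, estimated_total - real_found


# 15 of 20 seeded defects found; 30 real defects found (assumed figure).
rate, total, remaining = estimate_remaining_defects(20, 15, 30)
print(rate, total, remaining)  # 0.75 40.0 10.0
```

With a 75% detection rate, 30 real defects found suggests about 40 in total, so roughly 10 may still be hidden. The estimate is only as good as the resemblance between seeded and real defects.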

On a usual Sunday morning, you wake up, maybe with a cup of tea, and open a news portal just to see what is happening in the world. Within minutes, you find yourself reading article after article s...

Swap your team’s favourite quality superstitions, laugh at cursed demos and disappearing bugs, and turn “don’t deploy on Fridays” from folklore into smarter release habits.