Metamorphic testing

Metamorphic testing addresses a common testing problem: not knowing what the expected result should be (often called the test oracle problem). By defining relationships between the outputs of related inputs, we can check that AI models behave consistently, which increases our confidence in their reliability.

Metamorphic testing is a way to test systems, especially AI models, when you don’t know exactly what the correct output should be. Instead of checking for a “right answer,” you define rules (called metamorphic relations) that describe how the output should behave, for example stay the same, when you change the input in a specific way.

For example:

- If you slightly brighten an image, the object detection result should stay the same.

- If you replace a word in a positive sentence with a synonym, the sentiment analysis should still classify it as positive.
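The synonym relation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `toy_sentiment` is a hypothetical lexicon-based classifier standing in for a real model, and `SYNONYMS`, `replace_synonyms`, and `check_relation` are names invented for this example. Note that the check never needs the correct label for the source sentence; it only compares the outputs for the original and the transformed input.

```python
from typing import Callable

# Hypothetical toy sentiment model: a tiny word lexicon stands in for a real
# ML model. It deliberately knows "great" but not its synonym "excellent".
POSITIVE = {"great", "good", "enjoyable"}
NEGATIVE = {"bad", "boring", "awful"}


def toy_sentiment(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Assumed synonym table used to build the follow-up input.
SYNONYMS = {"great": "excellent", "boring": "dull"}


def replace_synonyms(text: str) -> str:
    """Transformation: swap known words for their synonyms."""
    return " ".join(SYNONYMS.get(w, w) for w in text.lower().split())


def check_relation(model: Callable[[str], str], source: str,
                   transform: Callable[[str], str]) -> bool:
    """Metamorphic relation: the label must not change after the transformation.
    No expected label for `source` is required."""
    return model(source) == model(transform(source))


for sentence in ["the movie was great", "a good and enjoyable film"]:
    print(sentence, "->", check_relation(toy_sentiment, sentence, replace_synonyms))
```

The first sentence violates the relation because the toy model recognises “great” but not “excellent”. That is exactly the kind of inconsistency metamorphic testing is meant to surface, found without any labelled data.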

Why is this good for us testers?

- You can test systems without a perfect oracle (that is, when expected outputs are unknown or hard to define).

- You can catch bugs or inconsistencies in systems such as machine learning models, whose outputs are hard to predict in advance.

- You can increase confidence in model behavior by checking for logical consistency.

- You can design smart test cases even when you don’t have labeled data.

- It’s great for exploratory testing of AI features.

- You can automate many of these checks to scale your testing.
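Automating these checks mostly means generating many source inputs and applying the same relation to each one. The sketch below does this for the brightness example. It assumes a hypothetical `toy_detector` that reports an object when an image has enough contrast, with images represented as small grayscale grids of random pixel values. `brighten`, `random_image`, and `run_suite` are illustrative names, not any library's API.

```python
import random
from typing import Callable, List

Image = List[List[int]]  # grayscale pixels in the range 0-255


def toy_detector(img: Image) -> bool:
    """Hypothetical stand-in for an object detector: reports an object when
    the brightest pixel exceeds the darkest by more than 100."""
    pixels = [p for row in img for p in row]
    return max(pixels) - min(pixels) > 100


def brighten(img: Image, delta: int = 20) -> Image:
    """Transformation: add a small brightness offset, clamped to 255."""
    return [[min(255, p + delta) for p in row] for row in img]


def random_image(rng: random.Random, size: int = 2) -> Image:
    return [[rng.randint(0, 255) for _ in range(size)] for _ in range(size)]


def run_suite(model: Callable[[Image], bool],
              transform: Callable[[Image], Image],
              n_cases: int = 1000, seed: int = 0) -> List[Image]:
    """Generate many source images and return those whose output changed
    after the transformation (relation: the output should stay the same)."""
    rng = random.Random(seed)
    violations = []
    for _ in range(n_cases):
        img = random_image(rng)
        if model(img) != model(transform(img)):
            violations.append(img)
    return violations


failures = run_suite(toy_detector, brighten)
print(f"{len(failures)} of 1000 generated images violated the relation")
```

Any violations this loop reports come from images whose bright pixels saturate at 255. Clamping then shrinks the contrast after brightening, so the detector's answer flips. A real suite would use real images and a real model, but the structure stays the same: generate inputs, transform, compare, and collect the violations.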

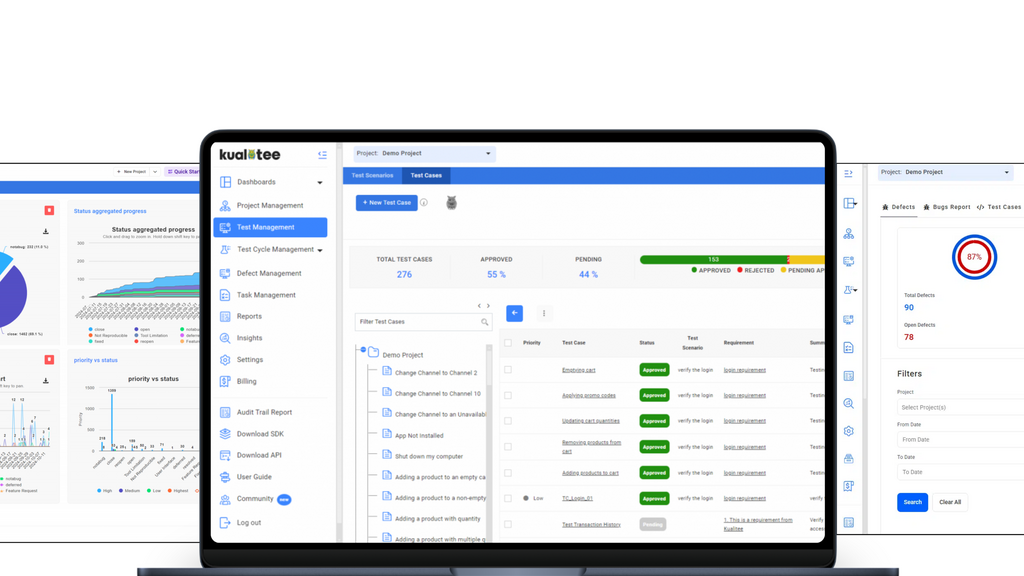
