Teams today are under relentless pressure to deliver faster releases, but speed often comes at a cost. AI-driven test generation promises a shortcut. Complex, meaningful test cases are generated in minutes, expanding coverage beyond what manual test design and even no-code test automation platforms can achieve. At first glance, it seems like a velocity booster.

But there’s a catch. As test coverage increases, regression cycles stretch longer, CI/CD pipelines clog, and feedback slows, throttling the very speed gains AI promised. In other words, AI expands coverage, but it can also turn automated regression testing into a bottleneck. Without a strategy to optimise regression test execution, teams can find themselves trapped in longer test cycles that undermine their ability to accelerate releases.

How does AI-driven test generation improve automation?

AI-driven test generation scales automation by creating repeatable tests faster and with less effort than manual test design, increasing coverage across a wide range of scenarios. Tools that leverage AI can scan code, understand API structures, or analyse UI flows to create tests that a human might overlook. However, scale also demands validation. AI-generated tests must be reviewed for accuracy, relevance, and alignment with requirements. Otherwise, volume can quickly lead to execution bloat, slower feedback, lower value, and even hallucinated scenarios. When used intentionally, with human oversight and controls, AI can multiply coverage in a meaningful way.

For teams, the benefits are clear:

- High-volume coverage: Generate hundreds of test cases rapidly, expanding test coverage.

- Ease of use: Simplify test design to enable adoption and scale test automation across teams.

- Accelerated workflows: Automatically generate test data, parameterise inputs, and build assertions—removing some of the most tedious and time-consuming parts of test creation.

AI test generation makes it easy to scale automated testing and boost coverage. But without managing the growing test suite, teams can quickly encounter new pain points from long regression cycles.

What is the intent behind regression testing?

Teams often assume that more tests equal higher confidence. But regression testing exists to ensure that new changes haven’t broken existing behaviour, not to run every available test simply because it exists. At its core, regression testing is about confidence and risk reduction.

High-quality regression testing should:

- Validate that core user journeys remain functional.

- Catch unintended side effects early.

- Detect integration issues across components or services.

- Provide fast, dependable feedback to developers.

Traditional regression testing is meant to be a safety net. But over time, an ever-growing number of tests weighs that safety net down. Teams end up running many tests, if not all of them, including tests unrelated to recent changes, which slows cycles without meaningfully improving confidence.

A high-quality regression strategy should be optimised so that it:

- Ensures all recent code changes are tested.

- Executes quickly and consistently.

- Supports fast decision-making.

- Scales without slowing teams down.

AI-generated tests rapidly increase the breadth of regression suites, but not every test contributes equally to each regression cycle. Executing tests unrelated to recent changes adds little value, while tests that verify those changes (directly or indirectly) provide confidence. Ideally, only the latter need to run.

Before we optimise regression cycles, we need to frame regression as a risk-focused activity, not a volume-based one. The goal is not to run every test, but to run the right ones: those that provide meaningful assurance that the system still works.

Why running every test can slow you down

AI test generation makes it easy to quickly build large, comprehensive regression test suites that cover edge cases and critical paths. But with a massive suite comes a new challenge: running all the tests takes significant time, and teams often don’t have the luxury to execute everything after every change.

This problem is especially acute for end-to-end web UI testing, where individual tests are resource-intensive and slow to execute. Running the full regression suite for each build can take hours or even days, significantly extending testing time. Teams may wait for feedback while pipelines are tied up, slowing the release cycle.

The challenge grows even more complex in distributed, microservices-based systems. A single change in one service can ripple across dependent services, affecting multiple flows that aren’t always obvious. Without visibility into these dependencies, teams often struggle to know which tests are needed to validate the change.

Take, for example, a leading financial services company managing a complex application built on 36 microservices. Using modern test automation tools, the team rapidly grew their regression suite to over 10,000 tests across UI, API, and database layers.

However, as the application evolved, running every test after each code change became extremely time-consuming, offsetting the gains of automated test creation. Their full regression suite could take days to execute, requiring a dedicated team just to manage test runs. Even selective, experience-based execution might still run thousands of unnecessary tests, causing delays and slowing feedback to developers.

What trade-offs do teams face when regression suites get too big?

When regression suites grow, teams often can’t run every test after each code change. Faced with limited time and slow feedback, they must balance risk and speed when deciding which tests to execute.

Typical team approaches:

- Run more tests than necessary “just to be safe.” While cautious, this approach slows feedback, consumes CI/CD resources, and can bottleneck release pipelines. It favours thoroughness at the cost of velocity.

- Figure out which tests to run. This involves digging through Jira tickets, tagging test cases, reviewing commit notes, or asking developers about recent changes, and then mapping those changes to impacted tests. While it aims to reduce unnecessary execution, it is slow, error-prone, and inconsistent, leaving teams vulnerable to coverage gaps and unexpected regression failures. It can also push teams back to the “just to be safe” approach.

- Delay testing until right before release. Some teams postpone running regression tests until just before a release. While this may save time initially, it increases risk. Bugs or regressions may go undetected until late in the cycle, potentially delaying the release while they are fixed in the final stages. This not only slows feedback but also increases the cost and effort of remediation, often requiring patch releases.

Each of these approaches is a trade-off between speed and risk. Either teams run too many tests and get slow feedback, rely on a potentially unreliable selection, or delay testing and face late-stage bugs with higher remediation costs. These are precisely the problems that test impact analysis (TIA) in regression testing is designed to solve.

How can teams get the speed benefits of AI without slowing feedback?

The key to unlocking real efficiency is combining AI test generation with test impact analysis. TIA can determine impact by analysing code changes, dependency relationships, and historical execution patterns to predict which tests are likely affected. For example, Parasoft's TIA uses code-coverage data to determine which tests are affected by a given code change, enabling teams to automatically and intelligently focus test execution. With this data-driven test selection, teams can achieve:

Focused AI-supported automated regression testing:

Run only the tests required to validate recent changes in each build, thereby reducing the scope of regression testing.

Faster feedback loop:

Make results available to developers faster so they can identify and fix bugs sooner.

Increase efficiency:

Free CI/CD pipelines from unnecessary test execution, preventing bottlenecks and maintaining high release velocity.

Increase confidence:

Automated, data-backed test selection increases confidence, giving teams, stakeholders and management assurance that critical changes are properly validated.
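The core idea behind coverage-based selection can be sketched in a few lines of Python. This is a simplified illustration, not Parasoft's implementation: it assumes a per-test coverage map recorded at file level during an earlier instrumented run (real tools typically work at a finer granularity, such as methods or lines), and a set of files changed in the build, which could come from something like `git diff --name-only`. All file and test names are hypothetical.

```python
# Hypothetical per-test coverage map from an earlier instrumented run:
# test name -> set of source files the test executed.
COVERAGE = {
    "test_login": {"auth/session.py", "auth/tokens.py"},
    "test_transfer": {"payments/transfer.py", "auth/session.py"},
    "test_statement_pdf": {"statements/render.py"},
    "test_new_flow": set(),  # hypothetical new test with no recorded coverage yet
}


def select_impacted_tests(coverage: dict[str, set[str]], changed_files: set[str]) -> set[str]:
    """Select tests whose recorded coverage overlaps the changed files.

    Tests with no coverage data are always selected, because nothing is
    known yet about what they exercise.
    """
    selected = set()
    for test, covered_files in coverage.items():
        if not covered_files or covered_files & changed_files:
            selected.add(test)
    return selected


if __name__ == "__main__":
    print(sorted(select_impacted_tests(COVERAGE, {"auth/session.py"})))
```

Treating tests with no recorded coverage as impacted is a deliberately conservative choice: newly generated tests, such as a fresh batch from an AI generator, run at least once so that coverage can be recorded for them, rather than being skipped indefinitely.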

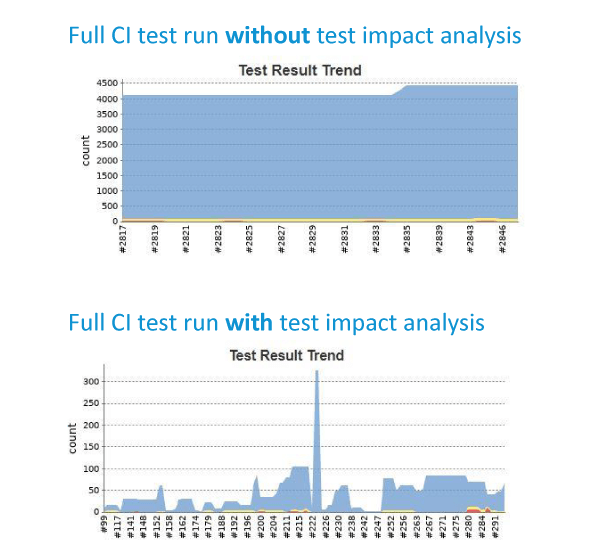

For example, the same financial services company mentioned earlier implemented TIA to manage their 10,000+ test suite across 36 microservices. Instead of running the entire suite for each code change, TIA automatically selected the tests impacted by recent changes. In one case, a small update to a single microservice required only 200 tests to be validated with confidence.

By focusing only on impacted tests, TIA reduced regression execution by roughly 98%, delivered feedback to developers much faster, and ensured no critical functionality was missed. In this way, teams can achieve the velocity promised by AI without sacrificing short regression cycles and thoroughness or risking regression failures.

Figure 1: Jenkins CI pipeline comparison chart showing test execution for pipeline runs with and without Test Impact Analysis (TIA).
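The roughly 98% figure follows directly from the test counts in the financial services example. A small helper makes the arithmetic explicit; it assumes a suite of exactly 10,000 tests, and since the text says “over 10,000,” the true reduction is slightly higher.

```python
def execution_reduction(total_tests: int, selected_tests: int) -> float:
    """Return the percentage of the suite skipped when only selected tests run."""
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    if not 0 <= selected_tests <= total_tests:
        raise ValueError("selected_tests must be between 0 and total_tests")
    return 100.0 * (total_tests - selected_tests) / total_tests


# Assumed figures: a 10,000-test suite, 200 tests selected for one small change.
print(f"{execution_reduction(10_000, 200):.0f}% fewer tests executed")
```

This measures the reduction in the number of tests executed. The saving in wall-clock time also depends on how long each selected test takes, so a handful of slow end-to-end UI tests can still dominate the remaining run.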

What’s the key takeaway for teams?

AI test generation is undeniably powerful. It can produce more tests, cover more scenarios, and uncover bugs that might otherwise go unnoticed. But as we’ve seen, more tests alone don’t equal faster releases.

Real efficiency comes from pairing AI with intelligent test-execution strategies, such as test impact analysis, to ensure the right tests run at the right time. Teams that adopt this approach can harness the promise of AI without its unintended consequences slowing them down.

By embracing both innovation and strategy, teams can deliver faster, safer, and smarter releases, exactly what today’s business demands.

To sum up

AI-driven test generation can help teams expand coverage by accelerating test creation, enabling them to catch bugs that might otherwise go unnoticed. But more tests alone do not automatically guarantee faster releases. The more tests you have, the longer it takes to run a full regression, delaying test feedback and remediation. This limits the impact AI-driven test generation has on release velocity. When we introduce intelligent test execution, we can manage those potentially massive regression suites that could slow feedback, clog pipelines, and undermine the velocity AI promises.

The real power comes from pairing AI-generated tests with data-driven strategies like test impact analysis (TIA). By focusing only on tests affected by recent changes, teams can:

- Run faster, more efficient regressions

- Maintain confidence in critical functionality

- Ensure AI-generated code changes are validated efficiently

- Reduce CI/CD bottlenecks

- Deliver frequent, high-quality releases

In short, AI is a multiplier for meaningful coverage in testing. Combined with intelligent test execution, it turns volume into velocity, giving teams faster, safer releases without sacrificing quality.

What do you think?

AI test generation can drive coverage, but how do you make it work for speed and confidence in your own team? Share your thoughts, ideas, or experiences.

Reflect on these questions:

- Do you often have to wait for regression testing feedback due to the volume of test cases being executed?

- How do you decide which tests to run for each change and which tests can be skipped?

- Have you tried combining AI test generation with data-driven test selection? What worked, or didn’t?

Take action:

- Measure how long your current regression cycles take.

- Monitor the growth of your regression test suite as you embrace AI-accelerated test creation.

- Track how test impact analysis accelerates feedback loops, increases confidence, and improves pipeline efficiency.

- Explore pairing AI-generated tests with TIA or other intelligent execution approaches.

References:

- Parasoft test impact analysis tools

- Back to the basics: Rethinking how we use AI in testing, Konstantinos Konstantakopoulos

- Five practical ways to use AI as a partner in Quality Engineering, Daria Tsion

- How To Do Good Regression Testing, Mark Winteringham