The National Cyber Security Centre (NCSC) defines penetration testing as: a method for gaining assurance in the security of an IT system by attempting to breach some or all of that system's security, using the same tools and techniques as an adversary might. Penetration testing should be viewed as a method for gaining assurance in your organisation's vulnerability assessment and management processes, not as a primary method for identifying vulnerabilities. A penetration test is similar to a financial audit: your finance team tracks expenditure and income day to day, and an audit by an external group checks that your internal team's processes are sufficient.

Cognitive load refers to the amount of mental effort required to perform a task, including everything a tester must keep in mind while testing, such as requirements, system behaviour, test data, environments, tools, expected results, and potential failure modes. Cognitive load is often described in three different forms:

Intrinsic cognitive load: This comes from the inherent complexity of the system being tested. Distributed systems, complex business rules, edge cases, and integrations naturally demand more mental effort.

Extraneous cognitive load: This is unnecessary mental effort caused by poor tooling, unclear requirements, fragmented documentation, inconsistent environments, or inefficient processes. Unlike intrinsic load, this type is avoidable.

Germane cognitive load: This is the productive mental effort spent learning, problem-solving, and building mental models of the system. This is the load on which we want testers to spend their energy.

It would be impossible to eliminate cognitive load entirely. Instead, effective testing requires reducing extraneous load so testers can devote their finite mental capacity to meaningful analysis and exploration.

The principle of Bug Clustering states that most defects are concentrated in a small number of modules or components of a software system. It can be seen as an application of the Pareto Principle, or 80/20 Rule, to software testing: bugs are not uniformly distributed throughout the software, and a disproportionately large number of them "cluster" in a small percentage of the code. Empirically, it is often observed that approximately 80% of the defects in an application are found in only 20% of its modules. Clustering usually occurs in three kinds of areas. High-complexity areas, such as modules with intricate business logic, numerous interdependencies, or complex algorithms, are harder to test and maintain, which makes them more prone to error. Code that is frequently modified, updated, or extended with new features tends to pick up new bugs more often. Areas that rely on third-party libraries or old, poorly documented codebases are also prone to clustering.
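One way to check whether a codebase shows this pattern is to measure what share of defects sits in the most defect-heavy 20% of modules. The sketch below assumes defect counts per module are already available; the function name, module names, and counts are hypothetical and only illustrate the arithmetic.

```python
def top_module_share(defects_per_module, module_fraction=0.2):
    """Percentage of all defects found in the most defect-heavy modules."""
    counts = sorted(defects_per_module.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0
    # Always look at at least one module, even for tiny codebases.
    top_n = max(1, round(len(counts) * module_fraction))
    return sum(counts[:top_n]) / total * 100


# Hypothetical defect counts for ten modules (illustrative data only).
defects = {
    "checkout": 45, "pricing": 35, "search": 5, "profile": 4, "login": 3,
    "emails": 3, "reports": 2, "help": 1, "about": 1, "footer": 1,
}
print(top_module_share(defects))  # 80.0
```

A result close to 80 suggests the clustering described above; a much lower figure suggests defects are spread more evenly.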

A stack trace is used in debugging to help developers locate the exact line of code and the sequence of function calls that led to a specific error, exception, or crash. In practice, it is a list showing the call stack (the history of nested function calls), starting from the currently executing function, where the error occurred, and tracing backward through each caller to the program's entry point. Each line in the stack trace represents a stack frame and typically includes:

The function or method name.

The file name where the function is defined.

The line number within that file.

In summary, developers use stack traces to find which part of the code caused a failure.
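The three parts of a stack frame listed above can be seen with Python's standard `traceback` module. This is a minimal sketch: `parse_price`, `total_price`, and `frames_for_failure` are hypothetical functions written for the example. Note that Python orders frames from the outermost call to the one that raised, which is the reverse of the order described above.

```python
import traceback


def parse_price(text):
    # Raises ValueError when text is not a valid number.
    return float(text)


def total_price(items):
    total = 0.0
    for item in items:
        total += parse_price(item)
    return total


def frames_for_failure(items):
    """Return (function, file, line) per stack frame of the failure, or [] on success."""
    try:
        total_price(items)
    except ValueError as exc:
        # extract_tb lists frames from the outermost call to the one that raised.
        frames = traceback.extract_tb(exc.__traceback__)
        return [(frame.name, frame.filename, frame.lineno) for frame in frames]
    return []


for name, filename, lineno in frames_for_failure(["1.50", "oops"]):
    print(f"{name} ({filename}:{lineno})")
```

The last line printed names `parse_price`, the function where the error occurred; the lines before it show how execution got there.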

The Bug Escape Rate is a quality metric that measures how effective the testing process was before a product release. It is the percentage of defects that were missed by the team(s) and subsequently discovered by end-users or customers in a production environment after the software was deployed. It is typically calculated by dividing the number of bugs found after release (escaped bugs) by the total number of bugs found (escaped bugs + bugs found before release) and multiplying by 100. For example, if the team found 100 bugs before release and customers report 10 additional bugs after deployment to production, the escape rate is 10 / (100 + 10) × 100 ≈ 9.1%. A low escape rate is the goal: it indicates that testing caught most defects before the public release. A high escape rate suggests problems in the process, such as insufficient test coverage, unrepresentative test environments, poor test prioritization, or a lack of focus on critical user paths. In essence, the escape rate tells you what share of known bugs slipped past testing.
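The calculation can be written as a small function. This is a sketch of the formula described above; the function and parameter names are illustrative, not taken from any tool.

```python
def bug_escape_rate(escaped, found_before_release):
    """Percentage of all known bugs that were found only after release."""
    total = escaped + found_before_release
    if total == 0:
        raise ValueError("no bugs recorded, so the escape rate is undefined")
    return escaped / total * 100


# 100 bugs found before release, 10 reported from production afterwards.
print(round(bug_escape_rate(escaped=10, found_before_release=100), 1))  # 9.1
```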

A data contract is a document that defines the ownership, structure, semantics, quality, and terms of use for exchanging data between a data producer and their consumers. It is human- and machine-readable, and so can be used as both a communication tool between teams and a way to automatically detect when expectations about data are broken.
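To illustrate the machine-readable side, the sketch below checks a record against a very small contract. Everything here is an assumption made for the example: real data contracts are usually kept in a standalone file and cover far more (semantics, quality rules, terms of use), and the `CONTRACT` layout, field names, and `contract_violations` helper are hypothetical.

```python
# Hypothetical contract: an owner plus the expected type of each field.
CONTRACT = {
    "owner": "orders-team",
    "fields": {
        "order_id": {"type": str, "required": True},
        "amount": {"type": float, "required": True},
        "coupon": {"type": str, "required": False},
    },
}


def contract_violations(record, contract=CONTRACT):
    """Return a list of human-readable problems; an empty list means the record conforms."""
    problems = []
    for name, rule in contract["fields"].items():
        if name not in record:
            if rule["required"]:
                problems.append(f"missing required field: {name}")
            continue
        if not isinstance(record[name], rule["type"]):
            problems.append(f"wrong type for field: {name}")
    return problems


print(contract_violations({"order_id": "A1", "amount": 9.99}))  # []
print(contract_violations({"order_id": 7}))
```

The second call reports both a wrong type for `order_id` and the missing `amount` field, which is the kind of broken expectation a consumer would want flagged automatically.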

Write-Audit-Publish (WAP) is a pattern for designing data pipelines where a pipeline is built up of several sections. Each section produces a result data set that is used by one or more downstream sections and conforms to the same three-stage process:

Write: The main work of the section is done and data is written to a staging area that is inaccessible to other sections.

Audit: The staging data is checked using automated checks.

Publish: Only data that passes the audit is published to downstream sections of the pipeline.
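The three stages can be sketched for a single pipeline section as follows. This is a simplified illustration, assuming in-memory lists stand in for the staging area and the published data set, and assuming an all-or-nothing audit where one failing row blocks the whole batch; `run_section` and its parameters are hypothetical names.

```python
def run_section(transform, audit, source_rows, published):
    """Run one pipeline section; extend `published` only if the audit passes."""
    # Write: do the section's work into a staging list nothing else reads.
    staging = [transform(row) for row in source_rows]

    # Audit: run the automated check over every staged row.
    failures = [row for row in staging if not audit(row)]
    if failures:
        return False  # nothing is published; downstream keeps its previous data

    # Publish: make the audited data visible to downstream sections.
    published.extend(staging)
    return True


def double_amount(value):
    return {"amount": value * 2}


def amount_is_non_negative(row):
    return row["amount"] >= 0


published = []
print(run_section(double_amount, amount_is_non_negative, [1, 2], published))   # True
print(run_section(double_amount, amount_is_non_negative, [3, -1], published))  # False
print(published)  # [{'amount': 2}, {'amount': 4}]
```

The second run fails the audit, so its staged rows never reach `published`, and downstream sections only ever see data that has been checked.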

Medallion data architecture is a way of splitting the ingestion and processing of data into three stages: bronze, silver, and gold.

In the bronze stage, ingested data is stored in its unaltered form.

The silver stage attempts to fix problems in the bronze data and augment it by linking it with other data, producing a more usable version.

The gold stage takes the silver data and summarises it, along with any other processing needed to make the data ready for consumption by downstream processes.
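A toy version of the three stages might look like this. The sketch assumes plain Python lists and dictionaries in place of real storage, and the field names, the customer lookup, and the choice to skip unparseable rows in the silver stage are all hypothetical.

```python
def to_silver(bronze_rows, customers):
    """Clean bronze rows and link each one to a customer name."""
    silver = []
    for row in bronze_rows:
        try:
            amount = float(str(row.get("amount", "")).strip())
        except ValueError:
            continue  # unusable row; the bronze copy still holds the original
        silver.append({
            "customer": customers.get(row["customer_id"], "unknown"),
            "amount": amount,
        })
    return silver


def to_gold(silver_rows):
    """Summarise silver rows as a total amount per customer."""
    totals = {}
    for row in silver_rows:
        totals[row["customer"]] = totals.get(row["customer"], 0.0) + row["amount"]
    return totals


# Bronze: ingested data kept exactly as it arrived, problems included.
bronze = [
    {"customer_id": "c1", "amount": " 10.5 "},
    {"customer_id": "c2", "amount": "n/a"},
    {"customer_id": "c1", "amount": "4.5"},
]
gold = to_gold(to_silver(bronze, {"c1": "Ada"}))
print(gold)  # {'Ada': 15.0}
```

Because the bronze rows are never modified, the silver and gold stages can be rebuilt later with better cleaning rules.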

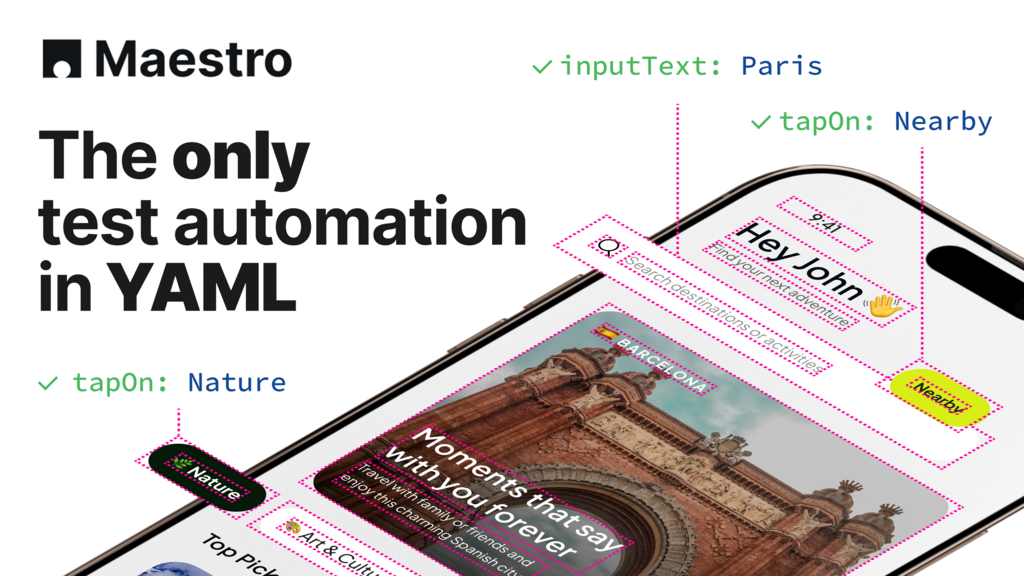

YAML is a way of expressing structured data that is both machine-readable and human-readable, similar to JSON. It is often used for configuration and contracts that need to be understood and maintained by people as well as systems.

A Compact Disc (CD) is a digital optical disc data storage format that was co-developed by Philips and Sony to store and play digital audio recordings. A standard CD can hold up to 700 MB of data, or roughly 80 minutes of uncompressed audio. Data is stored as microscopic "pits" and "lands" on a reflective layer, which a laser beam inside the CD player reads. Unlike vinyl records or cassette tapes, CDs are non-contact media, meaning the quality does not degrade with each playback. Over time, CDs were joined by DVDs and Blu-ray discs, which look similar but have larger capacities and can store video content of much higher quality. The rise of the internet, streaming, and online gaming all contributed to the decline in the use of CDs.

A Floppy Disk (or diskette) is a legacy magnetic storage medium that was the standard for moving and backing up computer data from the 1970s through the late 1990s. It consists of a thin, flexible ("floppy") magnetic disk sealed inside a protective plastic enclosure. Unlike the CD, which is read by a laser, a floppy disk uses a magnetic head on a mechanical arm to read and write data directly on the spinning surface. The most widely known format was the 3.5-inch floppy disk. While floppy disks were revolutionary for being rewritable and portable, they were eventually replaced by CDs and USB drives for several reasons:

Low Capacity: As files (like photos and software) grew in size, the 1.44 MB limit of the 3.5-inch disk became too small.

Fragility: The magnetic surface was easily damaged by dust, magnets, or heat.

Slow Speed: Data transfer was significantly slower than optical or flash storage.

Despite being obsolete in modern computing, the 3.5-inch floppy disk lives on globally as the universal "Save" icon in almost every software application.

The Three Amigos is a collaborative approach used in Behavior-Driven Development (BDD) to make sure everyone on a project has a shared understanding of a feature before any code is written. It brings together three distinct perspectives to bridge the gap between business requirements and technical implementation.

The three unique perspectives

The "Three Amigos" typically represent three roles (though more than three people can take part):

The business (Product Owner/Business Analyst):

Focus: What problem are we solving? What is the value?

Role: Defines the "What" and provides the requirements.

The developer:

Focus: How will we build this? What are the technical constraints?

Role: Discusses implementation and technical feasibility.

The tester:

Focus: What could go wrong? What about the edge cases?

Role: Ensures the feature is verifiable and "breaks" the logic early.

How does it work?

Instead of writing a long document, the Amigos meet for a short session (often 15–30 minutes) to discuss a specific user story.

Scenario Mapping: They turn requirements into concrete examples.

Gherkin Syntax: These examples are often written in the Given/When/Then format.

The Goal: By the end of the meeting, the team should have a set of clear, unambiguous "Acceptance Criteria" that serve as both the guide for development and the blueprint for automated tests.

Key benefits

Prevents rework: Issues are caught during the conversation rather than during the testing phase.

Shared understanding: Eliminates the "that's not what I meant" friction between departments.

Living documentation: The output (the scenarios) becomes a readable record of how the system is supposed to behave.

The outcome: The Three Amigos turn a vague idea into a user story that meets the Definition of Ready (DoR), meaning the developers can start coding with a clear, shared picture of what is needed, which significantly reduces the chance of misunderstanding the actual requirements.